Kubernetes: why all this hype?

For a few years now, Docker and Kubernetes have been very hot topics. These two technologies, and many related ones, are growing quickly. My goal in this article is to explain why Kubernetes is such a big deal: I will explain what matters most when maintaining software in production, and how Kubernetes helps with it.

Before diving into technical details, and for those who don’t know Kubernetes or system administration, I will first go back a little in time and explain in simple terms what is needed to operate software in production in order to meet users’ expectations. Then, I will introduce the basic features offered by Kubernetes and explain why it is used more and more by system administrators (also called operations people, or ops, as opposed to development people, or devs).

History of software in production

Ever since software systems started responding to user queries, the goal of system administrators has been to maximize the uptime of their systems.

To achieve this goal, great care has been taken to isolate the software and make sure it works on the underlying system, no matter what happens. Indeed, many breakdowns are due to integration issues: something else was changed and interfered with the main system, causing it to fail.

Throughout history, more and more abstraction layers have been built:

- At the beginning, systems were designed for specific hardware: all machines were different, and software was written in a language (called assembly) specific to the hardware.

- Then, a first abstraction layer allowed systems to work on any physical host: computers were standardized, and operating systems took care of the few remaining differences. Computers became powerful enough to host several applications, and issues started to appear.

- Then, another abstraction layer, virtual machines (VMs), allowed several systems to run on the same physical host without interfering with each other. At this point, system administrators started trying to isolate applications, but VMs are heavy, so it is not practical to run only one application per VM.

- Today, containers are the latest and lightest abstraction layer. The philosophy is to split an application into as many containers as possible. This creates a lot of containers, but allows very fine-grained control over the instances of each sub-system of the application.

The progression has been toward lighter systems (in terms of required computing power and memory). This gives faster application installation, startup and update times, and lower costs (because less hardware is needed, and fewer people are needed to operate everything). It also allows more automation, which further reduces the number of people required.

Note: while many companies are using containers for production systems, some people believe containers are not secure enough. I don’t want to get into the debate in this article, but I might write something about it in the future.

Handling containers with an orchestrator

Every company has a lot of applications, and each application brings in a lot of containers. Sometimes an application even has duplicated containers: multiple instances to cope with traffic or to enable high availability, for example. This leads to a lot of containers, and many physical hosts too, which all need to be managed.

These many containers assigned to many hosts form a distributed system, and each resource needs to be assigned and distributed among all these containers. More specifically, these resources are:

- Computing (CPU and memory):

  - Binpacking: assigning tasks to servers according to the CPU and memory they need, so that every server is well used and none is overloaded.

- Network:

  - Service discovery: route requests to the relevant service, without knowing beforehand whether it is up or what its address is.

  - Load balancing: distribute requests to even out the load among all the instances of a given application.

- Disk:

  - Configuration management: update all the instances of an application at once, from one common source.

  - Secrets: similar to configuration, but with secret data such as passwords.

  - Storage: save persistent data and allow newly started containers to find and access it.
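To make the binpacking item above more concrete, here is a minimal sketch of one classic placement heuristic, first-fit decreasing. It is only an illustration: the `Server` and `Task` classes, the `place` function and the node names are hypothetical, and a real orchestrator's scheduler takes many more constraints into account.

```python
from dataclasses import dataclass, field


@dataclass
class Server:
    name: str
    cpu: int      # free CPU, in millicores (hypothetical unit choice)
    memory: int   # free memory, in MiB
    tasks: list = field(default_factory=list)


@dataclass
class Task:
    name: str
    cpu: int
    memory: int


def place(tasks, servers):
    """First-fit decreasing: handle the biggest tasks first and put each
    one on the first server that still has enough CPU and memory.
    Returns the names of the tasks that could not be placed."""
    unplaced = []
    for task in sorted(tasks, key=lambda t: (t.cpu, t.memory), reverse=True):
        for server in servers:
            if server.cpu >= task.cpu and server.memory >= task.memory:
                # Reserve the resources on this server.
                server.cpu -= task.cpu
                server.memory -= task.memory
                server.tasks.append(task.name)
                break
        else:
            # No server had enough room for this task.
            unplaced.append(task.name)
    return unplaced


servers = [Server("node-a", 4000, 8192), Server("node-b", 2000, 4096)]
tasks = [
    Task("web", 1000, 1024),
    Task("db", 2000, 4096),
    Task("cache", 1000, 2048),
    Task("batch", 3000, 2048),
]
leftover = place(tasks, servers)
for server in servers:
    print(server.name, server.tasks)
print("unplaced:", leftover)
```

With only a handful of tasks this is easy to do by hand; the point is that the same decision has to be taken again for every container that starts, stops or moves.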

Soon, these resources can’t be managed manually anymore. Beyond a certain scale, which is reached very quickly, an automatic system is needed.

This automatic system is the cluster OS, also called an orchestrator: it is a centralized tool used to operate services which are distributed in containers spread over several machines.

With an orchestrator, we can easily implement nice features, which push automation even further:

- Self-healing: when a container or a physical host is malfunctioning, the orchestrator can restart the failed applications elsewhere.

- Horizontal scaling: to cope with increasing traffic, the orchestrator can start several instances of an application.

- Automated rollouts and rollbacks: when updating an application, the orchestrator can redirect requests to healthy instances as they come up and destroy the old ones when they get no more requests.
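Self-healing and horizontal scaling can both be seen as the same idea: compare the desired state with the observed state and compute the actions that close the gap. The sketch below illustrates that idea under simplifying assumptions; the `reconcile` function, the action names and the host names are hypothetical and are not taken from any real orchestrator.

```python
def reconcile(desired_replicas, instances, healthy_hosts):
    """Return the actions needed to reach the desired number of instances.

    instances maps an instance name to the host it runs on.
    healthy_hosts is the set of hosts currently considered healthy.
    """
    actions = []

    # Self-healing: instances running on an unhealthy host are considered lost.
    alive = {name: host for name, host in instances.items() if host in healthy_hosts}
    for name in sorted(set(instances) - set(alive)):
        actions.append(("forget", name))

    missing = desired_replicas - len(alive)
    if missing > 0 and healthy_hosts:
        # Scale up (or replace lost instances) on the least-loaded healthy hosts.
        load = {host: 0 for host in healthy_hosts}
        for host in alive.values():
            load[host] += 1
        for _ in range(missing):
            host = min(sorted(load), key=load.get)
            load[host] += 1
            actions.append(("start", host))
    elif missing < 0:
        # Scale down: stop the surplus instances.
        for name in sorted(alive)[:-missing]:
            actions.append(("stop", name))
    return actions


# node-b has failed: its two instances must be restarted elsewhere.
running = {"web-1": "node-a", "web-2": "node-b", "web-3": "node-b"}
for action in reconcile(3, running, {"node-a", "node-c"}):
    print(action)
```

Running such a function in a loop, and applying the actions it returns, is what lets the system recover from a failed host or follow a change in the requested number of instances without anyone intervening.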

Orchestrators come in all shapes and sizes. A few examples: Nomad, OpenShift, Rancher, Mesos, etc. Without getting into details, these all have one thing in common: they are owned by a company, and not built by the community. They were also developed before containers were a thing, and their support for containers is either absent or was added afterward and is therefore not well integrated.

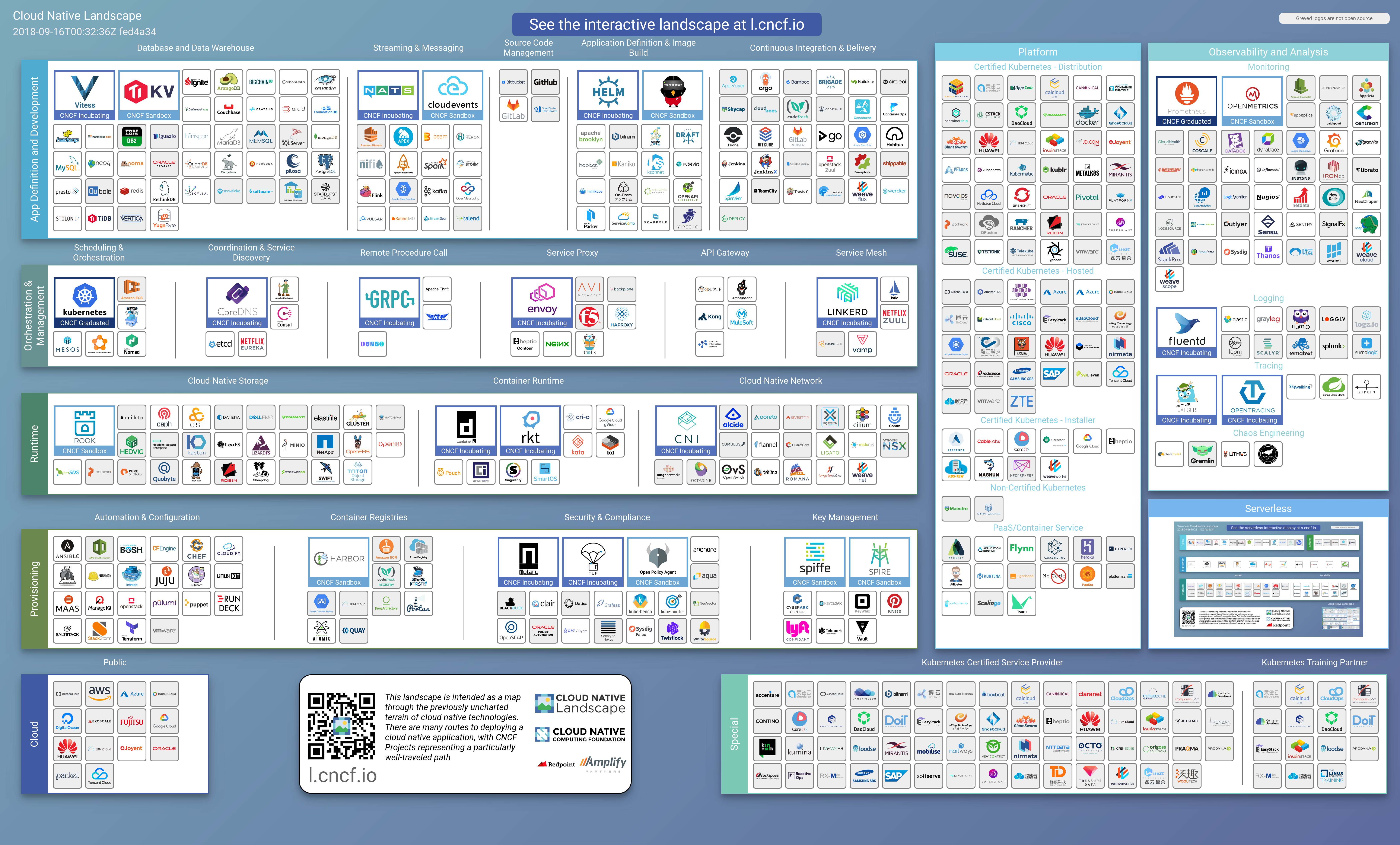

Kubernetes is different: it was initially developed by Googlers, drawing on their experience with Borg, Google’s proprietary orchestrator. It was open source from the beginning, with the intention of handing it over to the community. Indeed, it was donated to the CNCF, the Cloud Native Computing Foundation. A lot of companies and technologies (open or closed source) have joined the CNCF and are building this cloud native ecosystem together.

So, what’s the big deal with Kubernetes?

Kubernetes answers most of the requirements that system administrators have: it enables automation, collaboration and reuse of the community’s work.

Containers

As we said, containers (and Docker) are becoming the de facto standard to deploy applications. Their features are centered around automating the whole life cycle of applications: build, test and deploy.

Containers give system administrators confidence in their applications, for many reasons. First, containers are isolated, which means that once an application works, it will keep working, no matter what changes happen on the system. Second, containers are read-only, so their internal state depends only on external data, and it is easy to track which version of the application is running (a new version implies a new container). Lastly, containers are lightweight, which allows fast startup and easy automation. This is the main reason why containers are such a success among developers.

Kubernetes

Kubernetes is also becoming the de facto standard for orchestration.

- Kubernetes is open source and owned by the CNCF:

  - It already has a huge community, which is still growing very fast

  - A lot of companies and communities are working together around standards and compatibility (lots of opportunities and business to do! [1])

- Kubernetes has a modular architecture:

  - It uses containers underneath, initially Docker, but more and more container (or VM) runtimes are being added: gVisor, Kata Containers, …

  - Kubernetes is extensible through CRDs (Custom Resource Definitions) and plugins

  - Every component of the control plane can be changed independently

- Kubernetes is built with a modern architecture:

  - Kubernetes components are modular and can be swapped out

  - Its API is simple and usable

Conclusion

This is long enough already, so let’s keep it simple and short:

- Kubernetes is awesome: it lets you push automation very far.

- I think there are a lot of opportunities: new technologies and businesses will continue to grow alongside Kubernetes.

- Beware of the complexity: Kubernetes does not solve everything!

[1] You’ve got an idea? Let’s talk!